This article is co-authored by David Joy, Senior Sales Staff Engineer for Cockroach Labs, Harsh Shah, Staff Sales Engineer for Cockroach Labs, and Krishnaswamy Venkataraman, Technical Specialist – Azure for Microsoft.

So far in this series we have explored how to deploy CockroachDB on Microsoft Azure, discussing production options, deployment strategies, survivability goals, and more. Now, in part 4, we'll dive deeper into best practices for operating CockroachDB on Azure. We'll cover backups, compute and storage considerations, security, data locality, performance testing and survivability goals.

Whether you're a database administrator, DevOps engineer, or developer, this guide will provide practical insights to optimize your CockroachDB deployment on Azure.

1. Backups: How They Work and Best Strategies

Implementing an effective backup strategy is critical for data protection, disaster recovery, and compliance. In CockroachDB on Azure, backups safeguard your distributed data while leveraging Azure's robust services. Understanding the types of backups available, their use cases, and best practices helps you tailor a strategy that meets your organization's needs.

Types of Backups in CockroachDB

1. Full Backups: Captures the entire state of your database at a specific point in time.

Use Cases:

Establishing a baseline backup.

Periodic backups for comprehensive recovery points.

Why Choose Full Backups:

Simplifies the restore process since all data is contained in one backup.

Essential for disaster recovery scenarios requiring a complete database restoration.

Check out this step-by-step guide on our blog.
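Below is a minimal sketch of a full cluster backup to Azure Blob Storage. It assumes a recent CockroachDB version that accepts the azure-blob:// URI scheme. The storage account mystorageacct and container crdb-backups are hypothetical names, and the account key must be URL-encoded.

```sql
-- Hypothetical account, container, and key; replace with your own values.
-- BACKUP INTO writes a new full backup into a date-stamped subdirectory of the collection.
-- Reading as of 10 seconds ago reduces contention with live traffic.
BACKUP INTO 'azure-blob://crdb-backups/cluster?AZURE_ACCOUNT_NAME=mystorageacct&AZURE_ACCOUNT_KEY=url-encoded-key'
  AS OF SYSTEM TIME '-10s';
```

To back up a single database instead of the whole cluster, name it in the statement: BACKUP DATABASE your_database INTO, followed by the same URI.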

2. Incremental Backups: Stores only the data changes since the last full or incremental backup.

Use Cases:

Frequent backups to minimize potential data loss.

Reducing storage and network resource usage.

Why Choose Incremental Backups:

Efficiently updates backups without duplicating unchanged data.

Enables shorter backup windows and less impact on database performance.
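A minimal sketch of an incremental backup. It assumes the hypothetical storage account mystorageacct and container crdb-backups already hold a full backup. BACKUP INTO LATEST IN appends only the changes made since the most recent backup in that collection.

```sql
-- Appends an incremental backup to the latest full backup in this (hypothetical) collection.
BACKUP INTO LATEST IN 'azure-blob://crdb-backups/cluster?AZURE_ACCOUNT_NAME=mystorageacct&AZURE_ACCOUNT_KEY=url-encoded-key'
  AS OF SYSTEM TIME '-10s';
```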

3. Locality-Aware Backups: Backs up data in the same geographic region where it resides.

Use Cases:

Compliance with data residency regulations.

Optimizing backup and restore performance by reducing latency.

Why Choose Locality-Aware Backups:

Keeps each region's backup data in a storage location in that region, provided every region has a matching location, which helps meet data residency requirements.

Minimizes costs associated with cross-region data transfers.

Locality-aware backups are recommended for multi-region production workloads.
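Below is a sketch of a locality-aware backup. It makes these assumptions:

- Nodes were started with --locality region values that match Azure region names (eastus2, westus2, centralus).
- There is one hypothetical storage account per region.

Each node writes the data it backs up to the URI whose COCKROACH_LOCALITY value matches its own locality. Data from nodes with no matching URI goes to the default location.

```sql
-- COCKROACH_LOCALITY values are URL-encoded: region%3Deastus2 means region=eastus2.
-- One URI must use COCKROACH_LOCALITY=default; here it points at a (hypothetical)
-- centralus account, so centralus nodes, which have no dedicated URI, write there.
BACKUP INTO (
  'azure-blob://crdb-backups/default?AZURE_ACCOUNT_NAME=bkpcentralus&AZURE_ACCOUNT_KEY=url-encoded-key&COCKROACH_LOCALITY=default',
  'azure-blob://crdb-backups/eastus2?AZURE_ACCOUNT_NAME=bkpeastus2&AZURE_ACCOUNT_KEY=url-encoded-key&COCKROACH_LOCALITY=region%3Deastus2',
  'azure-blob://crdb-backups/westus2?AZURE_ACCOUNT_NAME=bkpwestus2&AZURE_ACCOUNT_KEY=url-encoded-key&COCKROACH_LOCALITY=region%3Dwestus2'
) AS OF SYSTEM TIME '-10s';
```

Incremental backups (BACKUP INTO LATEST IN) and restores (RESTORE FROM LATEST IN) must list the same set of URIs.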

Best Practices

1. Define a Clear Backup Strategy

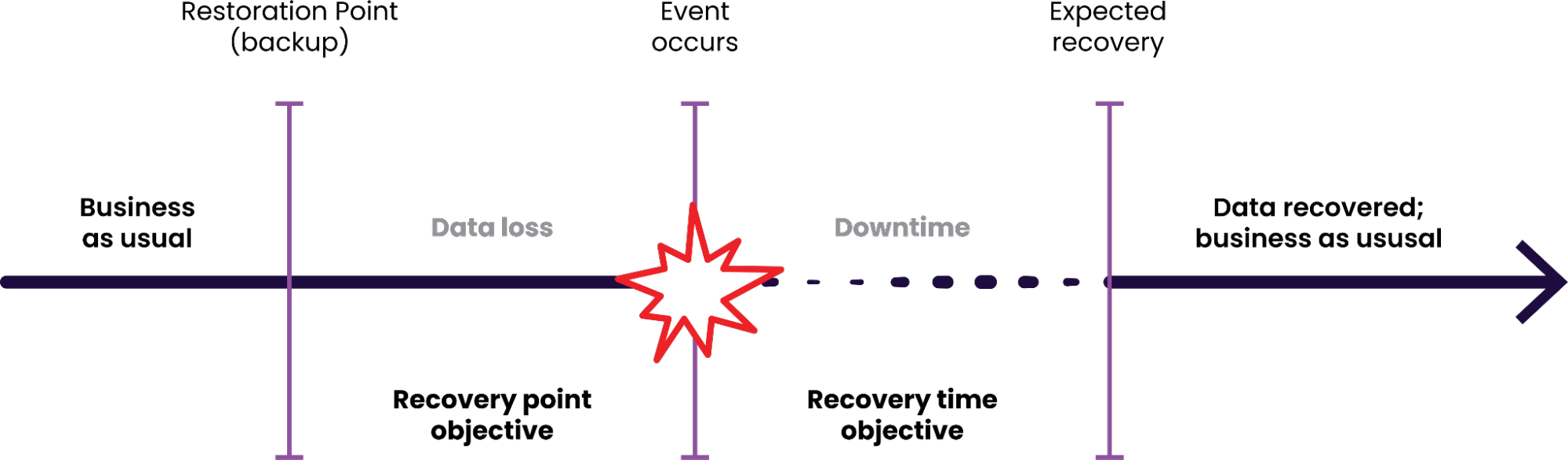

Align with Business Objectives: Determine your Recovery Point Objective (RPO) and Recovery Time Objective (RTO) to decide backup frequency and retention.

Combine Backup Types: Use full backups periodically with regular incremental backups in between to balance completeness and efficiency.
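CockroachDB can run this combination on its own with a backup schedule. Below is a minimal sketch with hypothetical names and a cadence chosen only for illustration:

- Hourly incremental backups keep the RPO to roughly an hour.
- A weekly full backup keeps restore chains short.

The sketch assumes Sunday at 02:00 is off-peak for your workload.

```sql
-- Hypothetical schedule label, account, container, and key.
-- RECURRING sets the incremental cadence; FULL BACKUP sets when a new full backup starts.
CREATE SCHEDULE app_backups
  FOR BACKUP INTO 'azure-blob://crdb-backups/cluster?AZURE_ACCOUNT_NAME=mystorageacct&AZURE_ACCOUNT_KEY=url-encoded-key'
    RECURRING '@hourly'
    FULL BACKUP '0 2 * * 0'
    WITH SCHEDULE OPTIONS first_run = 'now';
```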

2. Implement Locality-Aware Backups

Maintain Data Residency: Store each region's backup data in an Azure Storage account in that same region so that backups comply with regional laws.

Optimize Performance: Reduce latency and improve backup and restore speeds by keeping data local.

3. Secure Your Backups

Access Control: Use Azure's Shared Access Signatures (SAS) and role-based access control (RBAC) to restrict who can access backup data.

Encryption: Ensure data is encrypted at rest and in transit using Azure's encryption features.
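Azure Storage encrypts blob data at rest by default. If you also want backup files encrypted before they leave the cluster, CockroachDB's BACKUP accepts an encryption_passphrase option. Below is a minimal sketch with hypothetical storage names and a placeholder passphrase.

```sql
-- The passphrase is a placeholder; keep the real one in a secret store such as Azure Key Vault.
-- The same passphrase is needed later for incremental backups into this collection,
-- for SHOW BACKUP, and for RESTORE.
BACKUP INTO 'azure-blob://crdb-backups/cluster?AZURE_ACCOUNT_NAME=mystorageacct&AZURE_ACCOUNT_KEY=url-encoded-key'
  AS OF SYSTEM TIME '-10s'
  WITH encryption_passphrase = 'placeholder-passphrase';
```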

4. Automate and Monitor Backups

Scheduling: Use Azure Automation to schedule backups during off-peak hours to minimize impact on performance.

Monitoring: Leverage Azure Monitor to track backup operations and set up alerts for failures or unusual activity.
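Alongside Azure Monitor, CockroachDB exposes backup status through SQL. Below is a sketch of queries that a monitoring script could run to spot failed backups, for example a hypothetical Azure Automation runbook.

```sql
-- Backup schedules, their state, and their next run time.
SHOW SCHEDULES;

-- Recent backup jobs that failed, newest first, with their error messages.
SELECT job_id, status, created, error
  FROM [SHOW JOBS]
 WHERE job_type = 'BACKUP' AND status = 'failed'
 ORDER BY created DESC;
```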

5. Regularly Test Restores

Validate Backup Integrity: Periodically perform test restores to ensure backups are valid and usable.

Recovery Drills: Simulate disaster recovery scenarios to prepare your team for actual incidents.
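Below is a minimal restore drill. It assumes a hypothetical collection (mystorageacct, crdb-backups) and a database named your_database. Restoring under a new name with new_db_name leaves the production database untouched.

```sql
-- List the full backups (date-stamped subdirectories) in the collection.
SHOW BACKUPS IN 'azure-blob://crdb-backups/cluster?AZURE_ACCOUNT_NAME=mystorageacct&AZURE_ACCOUNT_KEY=url-encoded-key';

-- Restore one database from the latest backup chain under a new name.
-- Add encryption_passphrase to the WITH clause if the backup was encrypted.
RESTORE DATABASE your_database
  FROM LATEST IN 'azure-blob://crdb-backups/cluster?AZURE_ACCOUNT_NAME=mystorageacct&AZURE_ACCOUNT_KEY=url-encoded-key'
  WITH new_db_name = 'your_database_restore_test';

-- After validating the restored data, remove the test copy.
DROP DATABASE your_database_restore_test CASCADE;
```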

6. Optimize Costs

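Storage Tiers: Use Azure Blob Storage access tiers based on how frequently backups need to be accessed. Keep backups you may need to restore quickly in the Hot or Cool tier; blobs in the Archive tier must be rehydrated before CockroachDB can read them.

Lifecycle Management: Implement policies to automatically transition older backups to cooler storage tiers or delete them when they are no longer needed. Because incremental backups depend on the full backup they extend, apply these policies to complete backup chains rather than to individual files.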

7. Consider Compliance and Governance

Data Retention Policies: Define how long backups should be kept to meet legal and business requirements.

Audit Trails: Keep detailed logs of backup and restore operations for compliance auditing.

2. Compute Type, Storage Type, and Network Best Practices

Compute Recommendations

Choosing the right VM sizes is crucial for performance:

VM Series: Use the Dv5 or Ev5 series for a balance of CPU, memory, and network performance.

CPU and Memory: Ensure that each node has sufficient CPU and memory. A good starting point is 4 to 8 vCPUs and 16 to 32 GB of RAM per node, which is about 4 GB of RAM per vCPU. For cluster stability, Cockroach Labs recommends a minimum of 8 vCPUs per node and strongly recommends no fewer than 4.

Scale Out vs. Scale Up: CockroachDB is designed to scale horizontally. It's often better to add more nodes with moderate specs than a few nodes with high specs.

Storage Recommendations

Disk performance can significantly impact database performance:

Premium SSDs: Use Azure Premium SSDs or Ultra Disks for low-latency and high-throughput storage.

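Disk Size: For Premium SSD (v1) disks, IOPS and throughput scale with the provisioned size tier, so over-provisioning capacity is a way to gain performance. Premium SSD v2 and Ultra Disks let you provision IOPS and throughput largely independently of capacity.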

Networking Best Practices

Efficient networking ensures low latency and high throughput:

Virtual Network (VNet): Within each region, deploy all CockroachDB nodes in the same VNet to optimize network performance. In multi-region deployments, connect the regional VNets with global VNet peering.

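Proximity Placement Groups: Use proximity placement groups to keep nodes physically close within an Azure datacenter, reducing latency. A proximity placement group confines VMs to a single datacenter, which works against spreading nodes across availability zones. Weigh the latency gain against zone-level resilience.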

Network Security Groups (NSGs): Configure NSGs to allow necessary traffic between nodes and restrict unwanted access.

Accelerated Networking: Enable accelerated networking on VM network interfaces to reduce latency and improve throughput.

3. Security Best Practices

Authentication and Authorization

Use Secure Connections: CockroachDB uses TLS for secure communication between nodes and clients. Ensure certificates are properly managed.

Role-Based Access Control (RBAC): Implement RBAC to control user permissions within the database.

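CREATE ROLE app_user;

-- SELECT and INSERT are table privileges, so grant them on the tables rather than on the database.
GRANT CONNECT ON DATABASE your_database TO app_user;
GRANT SELECT, INSERT ON TABLE your_database.* TO app_user;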
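CREATE ROLE creates a role without the LOGIN option, so applications usually connect as a separate login user that is a member of the role. Table privileges granted directly cover only tables that already exist. Below is a minimal sketch that addresses both. The user app_service and its password are hypothetical placeholders.

```sql
-- Hypothetical login user for the application; the password is a placeholder.
CREATE USER app_service WITH PASSWORD 'placeholder-password';
GRANT app_user TO app_service;

-- Tables created later by the current user in the public schema of your_database
-- automatically grant these privileges to app_user.
USE your_database;
ALTER DEFAULT PRIVILEGES IN SCHEMA public GRANT SELECT, INSERT ON TABLES TO app_user;
```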

Azure Integration

Microsoft Entra ID (formerly Azure Active Directory, AAD): Integrate CockroachDB authentication with Entra ID for centralized identity management.

Key Management: Use Azure Key Vault to store and manage encryption keys and secrets securely.

Network Security

Private Endpoints: Use private endpoints to restrict database access to internal networks.

Firewalls: Implement firewalls to control inbound and outbound traffic to the CockroachDB cluster.

Compliance and Auditing

Compliance Standards: Ensure your deployment meets necessary compliance standards like GDPR, HIPAA, or PCI DSS.

Audit Logging: Enable and regularly review audit logs to monitor access and changes to the database.

4. Data Locality: Best Practices Between Local and Global Tables

Data locality is a powerful feature in CockroachDB that lets you control where your data physically resides. In a multi-region Azure deployment, leveraging data locality optimizes performance and helps comply with data residency regulations.

Benefits of Data Locality

Reduced Latency: By storing data close to users, you minimize network delays, leading to faster query responses.

Regulatory Compliance: Keeps data within specific geographic boundaries to meet legal and compliance requirements on data residency.

Efficient Resource Utilization: Reduces cross-region data transfer, saving on bandwidth costs and improving performance.

Implementing Data Locality in CockroachDB on Azure

CockroachDB allows you to specify data locality at the table or row level, aligning with your application's needs.

Global Tables

Use Case: Data that needs to be accessible with low read latency across all regions, such as configuration settings.

Behavior: Data is replicated across all regions, providing fast read access everywhere but may incur higher write latencies due to cross-region synchronization.

Regional Tables

Use Case: Data primarily accessed within a specific region, like user data localized to a country.

Behavior: The table's leaseholder and, under the default ZONE survival goal, its voting replicas are placed in the table's home region, giving low-latency reads and writes from that region.

Regional by Row Tables

Use Case: Rows within a table need to reside in different regions based on a column value, ideal for multi-tenant architectures.

Behavior: Each row is stored in the region specified by its crdb_region column value, allowing dynamic data placement.
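Below is a sketch of the three table localities. It assumes a hypothetical database app whose nodes report Azure region names (eastus2, westus2, centralus) in their --locality flags. The tables settings, west_inventory, and users (with id and name columns) are also hypothetical.

```sql
-- Region names must match the region values in the nodes' --locality flags.
ALTER DATABASE app SET PRIMARY REGION "eastus2";
ALTER DATABASE app ADD REGION "westus2";
ALTER DATABASE app ADD REGION "centralus";

-- Read-mostly reference data: low-latency reads from every region, slower writes.
ALTER TABLE app.settings SET LOCALITY GLOBAL;

-- A table homed in one non-primary region.
ALTER TABLE app.west_inventory SET LOCALITY REGIONAL BY TABLE IN "westus2";

-- Each row is homed in the region stored in the hidden crdb_region column.
ALTER TABLE app.users SET LOCALITY REGIONAL BY ROW;

-- crdb_region defaults to the gateway node's region but can be set explicitly.
INSERT INTO app.users (id, name, crdb_region) VALUES (1, 'alice', 'westus2');
```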

Best Practices

Define Your Regions: Start by specifying the regions in your database.

Set a Primary Region: Designate a primary region for default operations.

Analyze Data Access Patterns: Understand where your users are and how they interact with data to decide on the appropriate locality settings.

Combine Localities: Use a mix of global, regional, and regional by row tables to balance performance and complexity.

Monitor and Adjust: Regularly assess performance metrics and adjust locality settings as your application's needs evolve.

Compliance Considerations: Ensure that sensitive data complies with regional laws by restricting it to specific regions.
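To apply these practices, the first statements below show which regions the cluster and database have and which locality a table uses. They run against a hypothetical database app. The last two statements sketch data domiciling: on versions that support it, PLACEMENT RESTRICTED keeps the replicas of regional tables in their home regions. It requires the ZONE survival goal and does not apply to GLOBAL tables.

```sql
-- Regions and zones reported by the nodes, and the regions added to the database.
SHOW REGIONS FROM CLUSTER;
SHOW REGIONS FROM DATABASE app;

-- The output includes the table's LOCALITY clause.
SHOW CREATE TABLE app.users;

-- Data domiciling (session setting required on versions that gate this feature).
SET enable_multiregion_placement_policy = on;
ALTER DATABASE app PLACEMENT RESTRICTED;
```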

Leveraging Azure's Global Infrastructure

Azure Regions Alignment: Deploy CockroachDB nodes in Azure regions that match your data locality requirements.

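Networking Setup: Use Azure global virtual network peering to connect each region's VNet privately over the Microsoft backbone network. Inter-region latency is still bounded by the distance between regions.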

Availability Zones: Distribute nodes across availability zones within regions for increased resilience.

Effectively managing data locality in CockroachDB on Azure enhances performance, meets compliance needs, and provides a better user experience. By strategically placing data where it's needed most, you optimize your application's responsiveness and reliability.

5. Performance: Best Testing Practices

Benchmarking

Use Realistic Workloads: Simulate real-world scenarios using tools like CockroachDB's workload tool or TPC-C benchmarks.

Baseline Performance: Establish a performance baseline before making changes to identify impacts.

Monitoring Tools

CockroachDB DB Console: Use the built-in DB Console (formerly the Admin UI) for real-time metrics on queries, CPU usage, and network I/O.

Azure Monitor: Integrate with Azure Monitor for a centralized view of performance metrics across your infrastructure.

Optimization Techniques

Indexing: Create appropriate indexes to speed up query performance.

Query Optimization: Analyze slow queries using the EXPLAIN statement and optimize them.

Connection Pooling: Use connection pooling to manage database connections efficiently.
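Below is a minimal sketch of the indexing and EXPLAIN workflow. It assumes a hypothetical orders table whose primary key is id.

```sql
-- EXPLAIN ANALYZE executes the statement and reports the plan with actual execution statistics.
EXPLAIN ANALYZE SELECT id, total FROM orders WHERE customer_id = 42;

-- If the plan shows a full table scan, a secondary index on the filter column avoids it.
-- STORING copies total into the index; id comes along as the primary key,
-- so the query needs no lookup into the primary index.
CREATE INDEX orders_customer_id_idx ON orders (customer_id) STORING (total);
```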

Load Testing

Stress Testing: Apply high load to the database to test its behavior under stress.

Failover Testing: Simulate node failures to test the cluster's resiliency and recovery time.

6. Survivability Goals: Best Practices

Achieving high availability and fault tolerance is crucial for enterprise applications. CockroachDB's distributed architecture inherently supports survivability, but adhering to best practices enhances resilience when deploying on Azure.

High Availability Configuration

Replication Factor:

Number of Replicas: By default, CockroachDB keeps three replicas of each range, which lets a cluster with at least three nodes survive a single node failure without downtime. A range needs a majority of its replicas to stay available, so raising the replication factor to five tolerates two simultaneous node failures.
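With n replicas per range, a majority survives (n - 1) / 2 simultaneous failures, rounded down: 3 replicas survive one failure and 5 survive two. For a cluster that does not use the multi-region abstractions, below is a minimal sketch of raising the default replication factor.

```sql
-- Five replicas per range; the cluster needs at least five nodes to place them.
ALTER RANGE default CONFIGURE ZONE USING num_replicas = 5;

-- Confirm the change.
SHOW ZONE CONFIGURATION FROM RANGE default;
```

In multi-region databases, the survival goal determines replica counts, so prefer it over editing RANGE default.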

Multi-Region Deployment:

Distribute Nodes Across Regions: Deploy CockroachDB nodes in multiple Azure regions to withstand regional outages.

Leverage Availability Zones: Within each region, spread nodes across different availability zones to mitigate zone-level failures.

Data Replication and Placement

Replication Constraints:

Control Data Placement: Use zone configurations to specify where replicas reside.

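Ensure Diversity: Constrain replicas so they are distributed across three regions, one per region. Losing any single region then still leaves a majority of each range's replicas available, enhancing survivability.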
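Below is one way to express one-replica-per-region placement with a zone configuration. It assumes a hypothetical table bank.accounts in a database that does not use the multi-region SQL abstractions. For multi-region databases, CockroachDB manages these zone configurations itself, so prefer survival goals there.

```sql
-- Per-replica constraints: exactly one replica in each of three Azure regions.
ALTER TABLE bank.accounts CONFIGURE ZONE USING
  num_replicas = 3,
  constraints = '{"+region=eastus2": 1, "+region=westus2": 1, "+region=centralus": 1}';
```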

Survivability Goals Mapping:

Survive Zone Failures: With replicas in different zones, the cluster endures zone outages without downtime.

Survive Region Failures: With the REGION survival goal and at least three regions, the cluster remains operational even if an entire region fails.
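For multi-region databases, survival goals handle this mapping without hand-written zone configurations. Below is a minimal sketch for a hypothetical database app with at least three regions. REGION survival raises write latency, because writes must be acknowledged by replicas in more than one region.

```sql
-- Requires at least three database regions.
ALTER DATABASE app SURVIVE REGION FAILURE;

-- Confirm the goal; the default is ZONE.
SHOW SURVIVAL GOAL FROM DATABASE app;
```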

Network Configuration

Optimize Inter-Node Communication:

Use Azure's Global VNet Peering: Provides low-latency, secure connectivity between regions.

Configure Network Policies: Ensure network security groups (NSGs) allow necessary CockroachDB traffic (ports 26257 and 8080 by default).

Monitoring and Failover

Implement Robust Monitoring:

CockroachDB Metrics: Use the built-in DB Console (formerly the Admin UI) and export metrics to Azure Monitor for comprehensive insights.

Set Up Alerts: Configure alerts for node availability, disk usage, and latency issues to respond proactively.

Automated Healing and Failover:

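Rely on Automatic Rebalancing: Rebalancing is on by default. When a node stays unreachable longer than the server.time_until_store_dead cluster setting (5 minutes by default), CockroachDB re-replicates its data onto the remaining nodes, maintaining cluster health.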

Test Failure Scenarios:

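Node Failure: Simulate by stopping the cockroach process or the VM without warning. Decommissioning is a graceful removal, so use it to rehearse planned maintenance rather than failure.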

Zone/Region Failure: Use Azure Chaos Studio, or stop every VM in a zone or region, to mimic outages and observe cluster resilience.
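While a failure test runs, a few queries help confirm what the cluster is doing. Below is a sketch. The crdb_internal table is an internal, unstable interface, so treat its column names as an assumption for your version.

```sql
-- How long a node may be unreachable before its replicas are rebuilt elsewhere.
SHOW CLUSTER SETTING server.time_until_store_dead;

-- Node liveness and locality during the test.
SELECT node_id, locality, is_live
  FROM crdb_internal.gossip_nodes
 ORDER BY node_id;
```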

Backup and Disaster Recovery

Regular Backups:

Schedule Consistent Backups: Complement survivability features with regular backups stored in Azure Blob Storage.

Disaster Recovery Planning:

Document Recovery Procedures: Have clear, tested steps for restoring services in catastrophic events.

Cross-Region Restores: Practice restoring backups to different regions to prepare for regional failures.

By strategically configuring replication, distributing nodes across regions and zones, optimizing network settings, and implementing thorough monitoring, you enhance CockroachDB's inherent survivability on Azure. Regular testing and backups further ensure that your database remains resilient in the face of failures, aligning with enterprise-grade availability requirements.

A database optimized for enterprise scale

Operating CockroachDB on Azure offers a robust, scalable, and resilient platform for your applications. By following these best practices in backups, compute and storage configurations, security, data locality, performance testing, and survivability, you can optimize your deployment for enterprise-scale workloads.

Ready to unlock the full potential of your enterprise data strategy with CockroachDB and Azure? Visit here to speak with an expert. You can also learn more about our partnership by visiting our website.